A key-value database is essentially a large hash table of keys and values, distributed across a cluster of commodity servers. Key-value databases typically favor Availability and Partition Tolerance, the "A" and "P" of the CAP theorem.

A key-value database typically trades off data consistency in order to improve write times.
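The idea of one large hash table spread across several servers can be illustrated with a minimal sketch. It assumes plain hash partitioning (hash of the key modulo the number of nodes), which is only one possible placement scheme; real products may use others. The class name `ShardedStore` and the in-memory dictionaries standing in for servers are hypothetical.

```python
import hashlib


class ShardedStore:
    """Toy key-value store that spreads keys over several in-memory 'nodes'."""

    def __init__(self, node_count):
        # Each dict stands in for one server in the cluster (an assumption
        # made for illustration; no networking is involved).
        self.nodes = [{} for _ in range(node_count)]

    def _node_for(self, key):
        # Use a stable hash so the same key always maps to the same node.
        digest = hashlib.sha256(key.encode("utf-8")).digest()
        index = int.from_bytes(digest[:8], "big") % len(self.nodes)
        return self.nodes[index]

    def put(self, key, value):
        self._node_for(key)[key] = value

    def get(self, key, default=None):
        return self._node_for(key).get(key, default)


store = ShardedStore(node_count=3)
store.put("user:1", "Alice")
store.put("user:2", "Bob")
```

Because a lookup only needs to hash the key and contact one node, reads and writes stay fast as the cluster grows.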

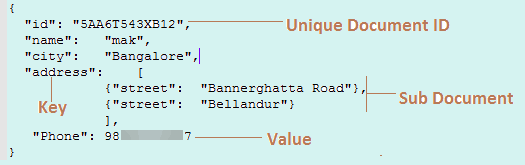

The key in a key-value database can be synthetic or auto-generated, and it uniquely identifies a single record in the database. The values can be strings, JSON, BLOBs, etc.
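As a small illustration of auto-generated keys and mixed value types, the sketch below uses a plain Python dictionary in place of a real database. The `put` helper and the sample values are hypothetical.

```python
import json
import uuid

store = {}  # a plain dict stands in for the key-value database


def put(value):
    # Auto-generate a unique key for the record and return it to the caller.
    key = str(uuid.uuid4())
    store[key] = value
    return key


text_key = put("hello")                                   # string value
json_key = put(json.dumps({"name": "Alice", "age": 30}))  # JSON value
blob_key = put(b"\x89PNG\r\n")                            # binary (BLOB) value
```

The store does not interpret the values; it only maps each key to whatever bytes or text were saved under it.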

Among the most popular key-value databases are Amazon DynamoDB, Oracle NoSQL Database, Riak, Berkeley DB, Aerospike, Project Voldemort, and IBM Informix C-ISAM.

Applications of Key-Value Databases

Let us look at some real-life examples where key-value databases are used and the benefits they provide.

Managing Web Advertisements

Key-value databases are widely used by web advertising companies.

A user’s activity is tracked based on web behavior, language, and location. On the basis of this online activity, web advertising companies decide which advertisement to show to the user.

It is also important that advertisements are served quickly.

It is important to target the right advertisement to the right customer in order to receive more clicks and hence maximize profits.

A combination of factors that determine what a user is interested in, such as the user’s tracked online activity, language, and location, forms the key, while all other information needed to serve the advertisement is kept as the value in the key-value database.
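A minimal sketch of this composite-key lookup is shown below. A dictionary again stands in for the database; the key layout (`interest:language:location`), the function names, and the sample advertisement are all assumptions made for illustration.

```python
ads = {}  # a plain dict stands in for the key-value database


def make_key(interest, language, location):
    # Join the targeting factors into one composite key, normalized to
    # lower case so that lookups are case-insensitive.
    return f"{interest}:{language}:{location}".lower()


# The value holds everything needed to serve the advertisement.
ads[make_key("running", "en", "US")] = {
    "ad_id": "ad-101",
    "headline": "Trail shoes on sale",
    "landing_url": "https://example.com/shoes",
}


def pick_ad(interest, language, location):
    # A single key lookup decides which advertisement to serve;
    # None is returned when no advertisement matches.
    return ads.get(make_key(interest, language, location))
```

Serving an advertisement then costs one key lookup, which is what keeps the response fast.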

User Session Data Retrieval

Your website needs to be efficient and fast to give a user the best service.

No matter how efficient your database is, if your website runs slowly, then from a user’s perspective your entire service is slow.

Websites often become slow because user sessions are handled poorly. If every request requires opening a new session instead of reusing cached information, the website will slow down.

User interactions with the website are tracked through the website’s cookies.

A cookie is a small piece of data stored by the browser; it can carry a unique ID that acts as a key in a key-value database. The server uses cookies to distinguish returning users from new ones.

The server needs to fetch the data quickly by doing a lookup on the cookie’s ID. The lookup returns information about which pages the user visits, what information they are looking for, the user’s profile, and so on.

Key-value stores are, therefore, ideal for storing and retrieving session data at high speed. The unique ID carried by the cookie acts as the key, while other information such as the user profile acts as the value.
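The session pattern described above can be sketched as follows. The in-memory dictionary, the function names, and the shape of the session record are hypothetical; a real deployment would keep the data in one of the key-value stores mentioned earlier and send the ID to the browser in a cookie.

```python
import uuid

sessions = {}  # a plain dict stands in for the key-value store


def start_session(profile):
    # Generate the unique ID that would be sent to the browser in a cookie.
    session_id = uuid.uuid4().hex
    sessions[session_id] = {"profile": profile, "pages_visited": []}
    return session_id


def record_visit(session_id, page):
    # One key lookup retrieves the whole session; unknown IDs are rejected.
    session = sessions.get(session_id)
    if session is None:
        return False
    session["pages_visited"].append(page)
    return True


sid = start_session({"name": "Alice"})
record_visit(sid, "/home")
record_visit(sid, "/pricing")
```

Each request carries only the cookie’s ID, and the server recovers the profile and browsing history with a single lookup instead of rebuilding the session.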